Every higher ed leader I talk with asks some version of the same question:

When will we actually see value from AI, and what will that look like?

The anticipation is real. McKinsey reports that 65% of organizations now use generative AI, nearly double the share from just ten months earlier. Yet most leaders admit they aren't sure the value is there. Adoption is high, but outcomes are uncertain.

That gap is exactly what we need to explore.

1. Why Most AI Efforts Fail

S&P Global recently reported that 42% of companies abandoned most of their AI initiatives, and nearly half of AI pilots (46%) were scrapped before reaching production.

These aren’t just corporate issues. Higher education faces the same trap: treating AI like another system, another dashboard, another piece of software to procure and monitor. But AI isn’t a system. It’s a way of working.

And if leaders don’t embrace it that way, failure rates will only grow.

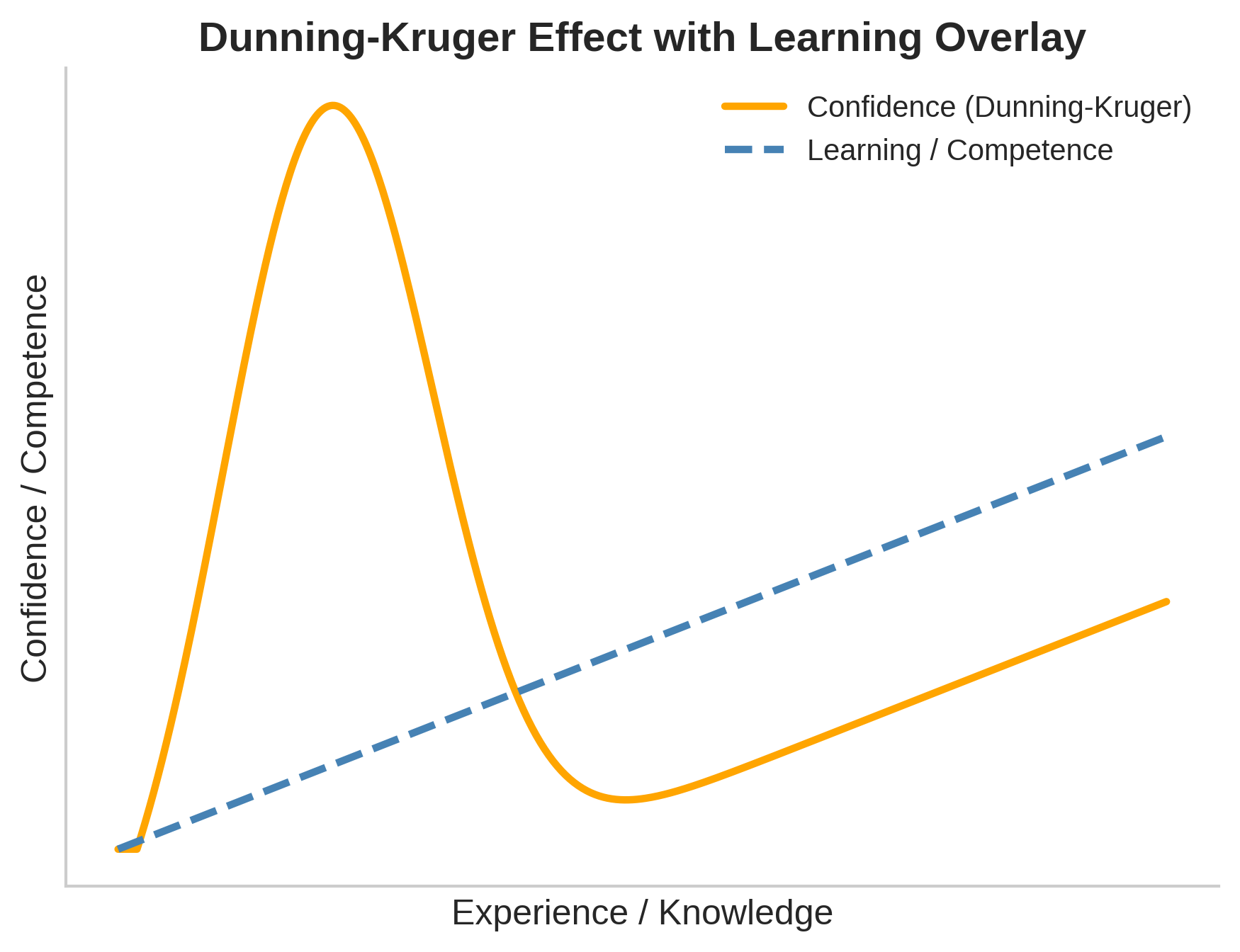

2. The Dunning–Kruger Trap for Higher Ed Leaders

In CRM and SIS projects, leaders can often stay at arm's length. They don't "use" the system directly; they consume dashboards and reports while front-line staff do the hands-on work.

AI changes that dynamic. It requires leaders to use it themselves.

The problem is, most leaders start with misplaced confidence. They believe they can form AI committees, debate governance, and even set policy without ever having experimented personally. That’s the peak of Mount Stupid on the Dunning–Kruger curve.

Then comes the valley of despair: the realization that AI is far more complex than expected, coupled with the uncomfortable truth that decisions already made — about governance, policy, or pilots — may have been uninformed.

The only way out is through use. Leaders have to pick up the tools, test them in their own workflows, and develop the literacy to lead responsibly.

3. The Georgia State Example

Georgia State University's use of conversational AI (through Mainstay, formerly AdmitHub) is widely celebrated. Its AI nudges increased FAFSA filing and registration, boosting fall enrollment by roughly 3 percentage points, or about 1,300 additional students.

What’s often left out of the headline: Georgia State had been using the same AI platform for more than five years before those results scaled.

The lesson is critical. AI value doesn’t appear in a single semester. It compounds over years of learning, iteration, and growing comfort with the tools.

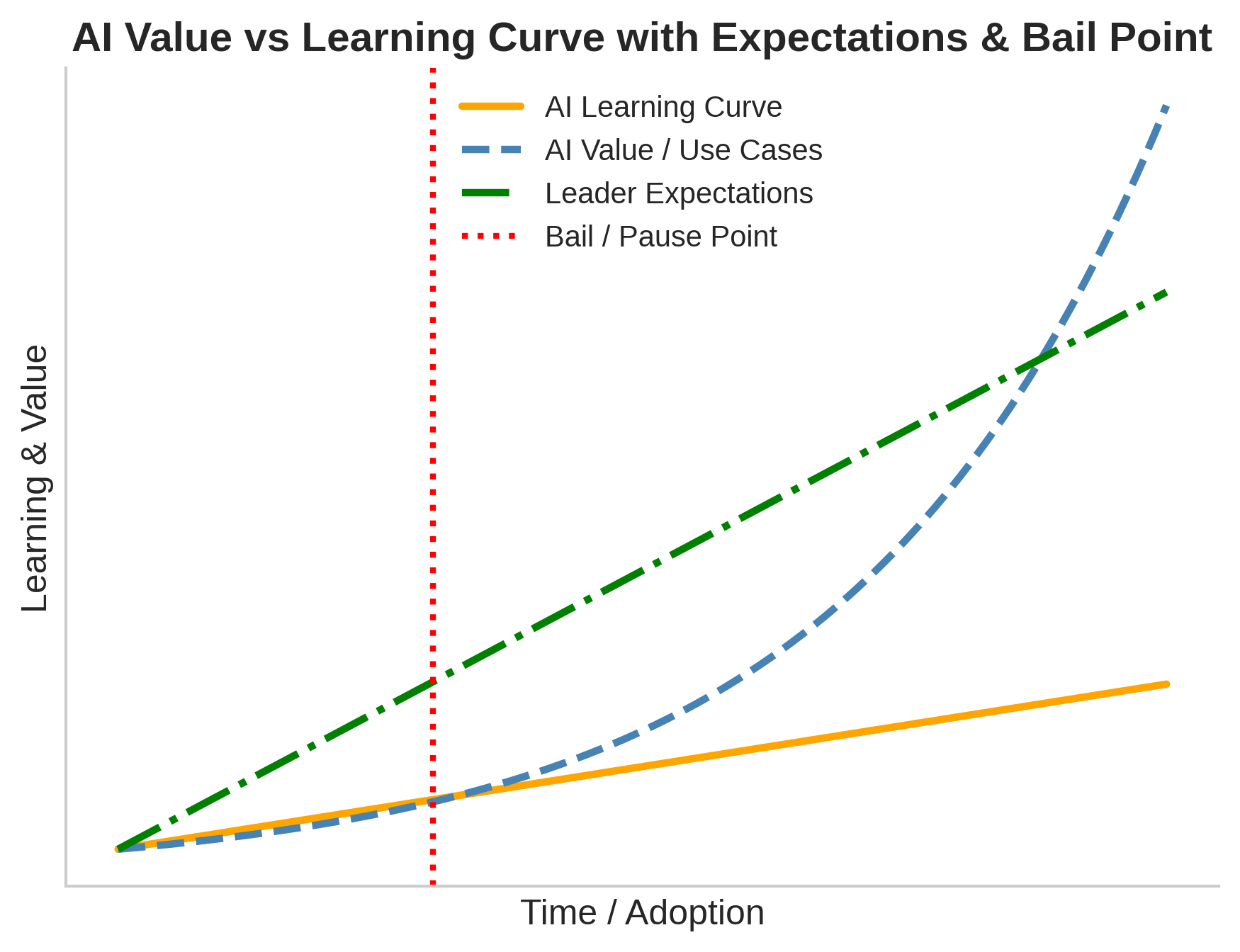

4. The AI Learning vs. Value Curve

Dharmesh Shah has shared a helpful framing: the AI learning curve climbs steadily, while the value curve accelerates only later.

The danger is that many institutions start debating AI strategy at the wrong time: too early in their learning curve. Leaders who don't yet know the tools well enough to judge them end up launching the wrong pilots, under-supporting the work, and feeling disappointed by the results.

The path forward is simple but demanding:

- Build literacy first.

- Let leaders and staff actually use AI.

- Then debate where it belongs in strategy and governance.

The payoff: once the learning curve is climbed, the value curve can bend sharply upward.

5. The First Step

So here’s the question: Are you personally using AI in your daily work?

If the answer is no, you can’t make good decisions about AI policy, governance, or strategy.

The first step toward an AI-driven institution is building the augmented workforce. That starts with leadership. Leaders must see value in their own work first — writing, analysis, planning, communication — before they can authentically scale it to their teams.

And the good news: step one doesn’t need to be fully complete before step two begins. Once leaders start, the momentum carries forward.

AI isn't a system. It's a way of working. Institutions that embrace that truth will see not just 2X but, in some cases, 10X improvements in productivity, clarity, and speed to output.
